1. Introduction

2. Support vector machine

3. Quantum least squares support vector machine

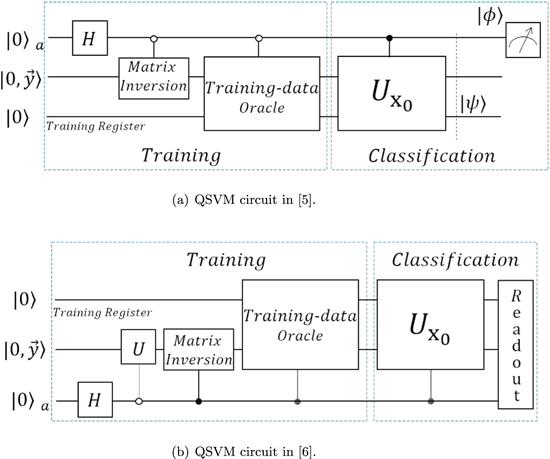

Figure 1. Brief circuit diagram of QSVM. The Matrix Inverse is employed to acquire the hyperplane parameters. The Training-data Oracle prepares ∣u⟩. The information of the query state is stored in ${U}_{{x}_{0}}$. The auxiliary qubit used to solve the equation is omitted. (a) $| \psi \rangle =\tfrac{1}{\sqrt{2}}(| 0\rangle | u\rangle +| 1\rangle | {x}_{0}\rangle )$ and $| \phi \rangle =\tfrac{1}{\sqrt{2}}(| 0\rangle -| 1\rangle )$. (b) U resets $| 0,\vec{y}\rangle $ to ∣00⟩. The QSVM algorithm in this section is based on (b). |

4. Variational quantum support vector machine

4.1. Training part

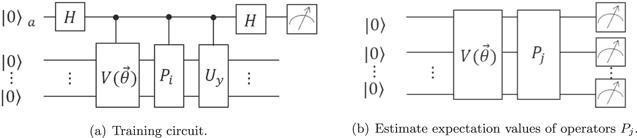

Figure 2. Brief circuit diagrams of training part of variational QSVM. (a) Calculating the molecular part of the loss function. $V(\vec{\theta })$ can be expressed as a product of L unitaries Vl(θl) sequentially acting on the input state ∣0...0⟩. As indicated, each unitary Vl(θl) can in turn be decomposed into a sequence of parametrized and unparametrized gates. Uy is a unitary matrix that satisfies $\langle 0...0| {U}_{y}=\langle 0,\vec{y}| =\tfrac{{\left(0;\vec{y}\right)}^{{\rm{T}}}}{| (0;\vec{y})| }.$ (b) Calculating the denominator of the loss function. The $\vec{\theta }$ is consistent with (a), Pj is the Pauli decomposition of F†F. Efficient derandomization Pauli measurements are employed at the end of the circuit, rather than random single-qubit measurements. |

Algorithm 1. Training variational QSVM |

| Input. An ancilla qubit initialized in $| 0\rangle $, a register initialized in $| 0...0\rangle $, a string of variable parameters $\vec{\theta }$, and set a suitable threshold ${E}_{\mathrm{cost}}^{\prime} $. |

| Step 1. Choose i = 1, repeat the circuit 2(a) M times, and record $({m}_{0}-{m}_{1})/M$. |

| Step 2. Choose j = 1, running circuit 2(b) and calculate the mean. |

| Step 3. After traversing all i and j, calculate the loss function ${E}_{\mathrm{cost}}.$ |

| Step 4. If ${E}_{\mathrm{cost}}\gt {E}_{\mathrm{cost}}^{\prime} $, update parameters $\vec{\theta }$, and then turn to Step 1; |

| else ${terminate}.$ |

4.2. Classification part

Figure 3. Brief circuit diagram of classification part of variational QSVM. The $\vec{\theta }$ of $V(\vec{\theta })$ is the one in the last cycle of algorithm 1. The Training-data Oracle prepares state ∣u⟩. ${U}_{{x}_{0}}$ contains the information of query state ∣x0⟩, and $\langle 0...0| {U}_{{x}_{0}}=\langle {x}_{0}| $. |

Algorithm 2. Classification variational QSVM |

| Input. An ancilla qubit initialized in $| 0\rangle $, a register initialized in $| 0{\rangle }^{\otimes n}$, a training register initialized in $| 0...0\rangle $, construct ${U}_{{x}_{0}}$ according to query qubit $| {x}_{0}\rangle $. |

| Step 1. Set $\epsilon =0.1$, repeat the circuit ${\epsilon }^{-2}$ times. If the calculation result satisfies equations ( |

| or ( |

| Step 2. Set $\epsilon =0.01$, repeat the circuit ${\epsilon }^{-2}$ times. If the calculation result satisfies equations ( |

| or ( |

| Step 3. Erase ε in equations ( |

4.3. Numerical experiment simulation

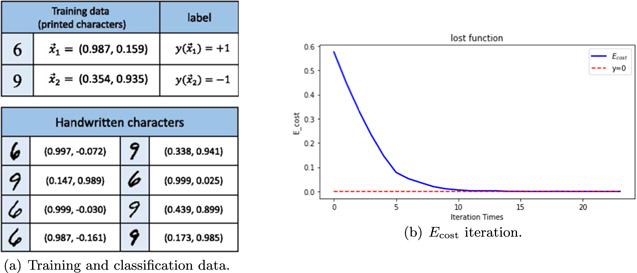

Figure 4. (a) Experimental data of [6], where the feature values are chosen as the vertical ratio and the horizontal ratio. The training data is in a standard font (Times New Roman). The arbitrary chosen handwritten samples for classification and their feature vectors. (b) Loss function image optimized by gradient descent penalty. The calculation result is simulated by Python. |

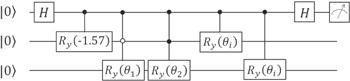

Figure 5. The classification circuit. The rotation angles ${\theta }_{1}=2\arctan \tfrac{{\left({x}_{1}\right)}_{2}}{{\left({x}_{1}\right)}_{1}}$, ${\theta }_{2}=2\arctan \tfrac{{\left({x}_{2}\right)}_{2}}{{\left({x}_{2}\right)}_{1}}$. The last two controlled Ry(θi) gates form ${U}_{{x}_{0}}$, with ${\theta }_{i}=-2\arctan \tfrac{{\left({x}_{i}\right)}_{2}}{{\left({x}_{i}\right)}_{1}}$, i = 3, 4,…,10. |

Table 1. The classification results of samples. In the two columns below the number of measurements, the left one is the number of times the auxiliary qubit measures 0 and the right is obtaining 1. |

| Samples\Times&Results | 100 | R | 10000 | R | ||

|---|---|---|---|---|---|---|

| x3 = (0.997, − 0.072) | 73 | 27 | 6 | 6405 | 3695 | 6 |

| x4 = (0.147, 0.989) | 22 | 78 | 9 | 3636 | 6364 | 9 |

| x5 = (0.999, − 0.030) | 82 | 18 | 6 | 7466 | 2534 | 6 |

| x6 = (0.987, − 0.161) | 68 | 32 | 6 | 7540 | 2460 | 6 |

| x7 = (0.338, 0.941) | 37 | 63 | 9 | 4048 | 5952 | 9 |

| x8 = (0.999, 0.025) | 62 | 38 | 6 | 6924 | 3076 | 6 |

| x9 = (0.439, 0.899) | 36 | 64 | 9 | 4562 | 5438 | 9 |

| x10 = (0.173, 0.985) | 30 | 70 | 9 | 4524 | 5476 | 9 |