1. Introduction

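If $g:{\mathbb{R}}\to {\mathbb{R}}$ is a continuously differentiable function (i.e. its derivative $g^{\prime} $ is continuous) such that $Z(g)\cap Z(g^{\prime} )=\varnothing $, where $Z(\cdot )$ denotes the zero set, then every zero of $g$ is simple and the composite $\delta \circ g$ is defined by $\begin{eqnarray*}\delta (g(x))=\sum _{{x}_{0}\in Z(g)}\displaystyle \frac{\delta (x-{x}_{0})}{\left|g^{\prime} ({x}_{0})\right|}.\end{eqnarray*}$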
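As a quick sanity check of the composition rule $\delta (g(x))=\sum _{{x}_{0}\in Z(g)}\delta (x-{x}_{0})/\left|g^{\prime} ({x}_{0})\right|$, the Python sketch below replaces $\delta $ by a narrow Gaussian ${\delta }_{\varepsilon }$ and compares $\int {\delta }_{\varepsilon }(g(x))h(x){\rm{d}}x$ with $\sum _{{x}_{0}\in Z(g)}h({x}_{0})/\left|g^{\prime} ({x}_{0})\right|$. The test function $g(x)={x}^{2}-1$, the weight $h=\exp $, the width $\varepsilon $ and the grid size are illustrative assumptions, not taken from the text.

```python
import math


def nascent_delta(y, eps):
    """Gaussian approximation of the Dirac delta with standard deviation eps."""
    return math.exp(-0.5 * (y / eps) ** 2) / (eps * math.sqrt(2.0 * math.pi))


def integral_delta_of_g(g, h, lo, hi, eps=1e-3, n=300_000):
    """Midpoint-rule estimate of the integral of delta_eps(g(x)) * h(x) over [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * dx
        total += nascent_delta(g(x), eps) * h(x)
    return total * dx


def composition_rule(h, zeros, dg):
    """Sum of h(x0) / |g'(x0)| over the simple zeros x0 of g."""
    return sum(h(x0) / abs(dg(x0)) for x0 in zeros)


def g(x):
    """Illustrative choice: simple zeros at -1 and +1."""
    return x * x - 1.0


def dg(x):
    """Derivative of the illustrative g."""
    return 2.0 * x


lhs = integral_delta_of_g(g, math.exp, -3.0, 3.0)
rhs = composition_rule(math.exp, [-1.0, 1.0], dg)
print(lhs, rhs)  # both approximately cosh(1)
```

Shrinking $\varepsilon $ (with a correspondingly finer grid) tightens the agreement; the condition $Z(g)\cap Z(g^{\prime} )=\varnothing $ is what keeps every factor $1/\left|g^{\prime} ({x}_{0})\right|$ finite.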

2. Uncertainty regions of observables

Let $({{\boldsymbol{A}}}_{1},\ldots ,\,{{\boldsymbol{A}}}_{n})$ be an n-tuple of qudit observables acting on ${{\mathbb{C}}}^{d}$. The uncertainty region of such an n-tuple $({{\boldsymbol{A}}}_{1},\ldots ,{{\boldsymbol{A}}}_{n})$ over mixed quantum states is defined by

It holds that

Note that ${\mathscr{P}}({\boldsymbol{A}})\subset {\mathscr{M}}({\boldsymbol{A}})\subset \mathrm{conv}({\mathscr{P}}({\boldsymbol{A}}))$. The first inclusion is obvious; the second follows immediately from the result obtained in [33]: for any density matrix $\rho \in {\rm{D}}\left({{\mathbb{C}}}^{d}\right)$ and any qudit observable ${\boldsymbol{A}}$, there is a pure-state ensemble decomposition $\rho ={\sum }_{j}{p}_{j}| {\psi }_{j}\rangle \langle {\psi }_{j}| $ such that

Let ${\boldsymbol{A}}$ be an observable acting on ${{\mathbb{C}}}^{d}$, and denote by $\lambda ({\boldsymbol{A}})$ the vector of its eigenvalues, with components ${\lambda }_{1}({\boldsymbol{A}})\leqslant \cdots \leqslant {\lambda }_{d}({\boldsymbol{A}})$. It holds that

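Let ${\boldsymbol{a}}:=\lambda ({\boldsymbol{A}})$, i.e. ${a}_{j}:={\lambda }_{j}({\boldsymbol{A}})$. Without loss of generality, take ${\boldsymbol{A}}=\mathrm{diag}({a}_{1},\ldots ,{a}_{d})$ and write $\rho ={\boldsymbol{U}}\,\mathrm{diag}(\lambda (\rho )){{\boldsymbol{U}}}^{\dagger }$ with ${\boldsymbol{U}}$ unitary. Then ${\mathrm{Var}}_{\rho }({\boldsymbol{A}})=\mathrm{Tr}({{\boldsymbol{A}}}^{2}\rho )-\mathrm{Tr}{({\boldsymbol{A}}\rho )}^{2}=\left\langle {{\boldsymbol{a}}}^{2},{{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )\right\rangle -{\left\langle {\boldsymbol{a}},{{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )\right\rangle }^{2}$, where ${\boldsymbol{a}}={\left({a}_{1},\ldots ,{a}_{d}\right)}^{{\mathsf{T}}}$, ${{\boldsymbol{a}}}^{2}={\left({a}_{1}^{2},\ldots ,{a}_{d}^{2}\right)}^{{\mathsf{T}}}$, ${{\boldsymbol{D}}}_{{\boldsymbol{U}}}=\overline{{\boldsymbol{U}}}\circ {\boldsymbol{U}}$ (here $\circ $ denotes the Schur product, i.e. the entrywise product), and $\lambda (\rho )={\left({\lambda }_{1}(\rho ),\ldots ,{\lambda }_{d}(\rho )\right)}^{{\mathsf{T}}}$. Since ${{\boldsymbol{D}}}_{{\boldsymbol{U}}}$ is doubly stochastic, ${{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )$ is a probability vector. Denote $f({\boldsymbol{x}}):=\left\langle {{\boldsymbol{a}}}^{2},{\boldsymbol{x}}\right\rangle -{\left\langle {\boldsymbol{a}},{\boldsymbol{x}}\right\rangle }^{2}={\sum }_{i=1}^{d}{a}_{i}^{2}{x}_{i}-{\left({\sum }_{i=1}^{d}{a}_{i}{x}_{i}\right)}^{2}$ for ${\boldsymbol{x}}=({x}_{1},\ldots ,{x}_{d})$ in the probability simplex ${{\rm{\Delta }}}_{d-1}$.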
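The identity ${\mathrm{Var}}_{\rho }({\boldsymbol{A}})=\left\langle {{\boldsymbol{a}}}^{2},{{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )\right\rangle -{\left\langle {\boldsymbol{a}},{{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )\right\rangle }^{2}$ can be checked numerically. The Python sketch below assumes ${\boldsymbol{A}}=\mathrm{diag}({\boldsymbol{a}})$ and $\rho ={\boldsymbol{U}}\,\mathrm{diag}(\lambda (\rho )){{\boldsymbol{U}}}^{\dagger }$; the particular $3\times 3$ unitary (two real rotations around a phase matrix), the spectrum of ${\boldsymbol{A}}$ and $\lambda (\rho )$ are hypothetical choices made only for illustration.

```python
import cmath
import math


def matmul(X, Y):
    """Product of two matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def dagger(X):
    """Conjugate transpose."""
    return [[X[j][i].conjugate() for j in range(len(X))] for i in range(len(X[0]))]


def example_unitary(theta, phi, chi):
    """Hypothetical 3x3 unitary: rotation in (1,2) * phase matrix * rotation in (2,3)."""
    c1, s1, c2, s2 = math.cos(theta), math.sin(theta), math.cos(chi), math.sin(chi)
    r12 = [[c1, -s1, 0.0], [s1, c1, 0.0], [0.0, 0.0, 1.0]]
    phases = [[1.0, 0.0, 0.0], [0.0, cmath.exp(1j * phi), 0.0], [0.0, 0.0, cmath.exp(-1j * phi)]]
    r23 = [[1.0, 0.0, 0.0], [0.0, c2, -s2], [0.0, s2, c2]]
    return matmul(matmul(r12, phases), r23)


def schur_matrix(U):
    """D_U = conj(U) o U, i.e. the entrywise moduli squared |U_ij|^2."""
    return [[abs(u) ** 2 for u in row] for row in U]


def variance_direct(a, U, lam):
    """Tr(A^2 rho) - Tr(A rho)^2 with A = diag(a) and rho = U diag(lam) U^dagger."""
    d = len(a)
    diag_lam = [[lam[i] if i == j else 0.0 for j in range(d)] for i in range(d)]
    rho = matmul(matmul(U, diag_lam), dagger(U))
    m1 = sum(a[i] * rho[i][i].real for i in range(d))
    m2 = sum(a[i] ** 2 * rho[i][i].real for i in range(d))
    return m2 - m1 ** 2


def variance_schur(a, U, lam):
    """<a^2, D_U lam> - <a, D_U lam>^2."""
    D = schur_matrix(U)
    p = [sum(D[i][j] * lam[j] for j in range(len(lam))) for i in range(len(a))]
    m1 = sum(ai * pi for ai, pi in zip(a, p))
    m2 = sum(ai ** 2 * pi for ai, pi in zip(a, p))
    return m2 - m1 ** 2


a = [1.0, 2.0, 4.0]      # hypothetical spectrum of A
lam = [0.5, 0.3, 0.2]    # hypothetical spectrum of rho
U = example_unitary(0.3, 0.7, 1.1)
print(variance_direct(a, U, lam), variance_schur(a, U, lam))
```

Because ${{\boldsymbol{D}}}_{{\boldsymbol{U}}}$ is doubly stochastic, ${{\boldsymbol{D}}}_{{\boldsymbol{U}}}\lambda (\rho )$ is a probability vector, which is why the variance reduces to a function on the probability simplex.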

(i) If $d=2$, then ${x}_{2}=1-{x}_{1}$ and $\begin{eqnarray*}\displaystyle \begin{array}{rcl}f({x}_{1},{x}_{2}) & = & {a}_{1}^{2}{x}_{1}+{a}_{2}^{2}{x}_{2}-{\left({a}_{1}{x}_{1}+{a}_{2}{x}_{2}\right)}^{2}\\ & = & {a}_{1}^{2}{x}_{1}+{a}_{2}^{2}(1-{x}_{1})-{\left({a}_{1}{x}_{1}+{a}_{2}(1-{x}_{1})\right)}^{2}\\ & = & {\left({a}_{2}-{a}_{1}\right)}^{2}\left[\displaystyle \frac{1}{4}-{\left({x}_{1}-\displaystyle \frac{1}{2}\right)}^{2}\right]\leqslant \displaystyle \frac{1}{4}{\left({a}_{2}-{a}_{1}\right)}^{2},\end{array}\end{eqnarray*}$ so that ${f}_{\max }=\tfrac{1}{4}{\left({a}_{2}-{a}_{1}\right)}^{2}$, attained at ${x}_{1}={x}_{2}=\tfrac{1}{2}$.

(ii) If $d\geqslant 3$, assume without loss of generality that ${a}_{1}\lt {a}_{2}\lt \cdots \lt {a}_{d}$. We show that $f$ attains its maximum at the point $({x}_{1},{x}_{2},\ldots ,{x}_{d-1},{x}_{d})=(\tfrac{1}{2},0,\ldots ,0,\tfrac{1}{2})$, the maximal value being $\tfrac{1}{4}{\left({a}_{d}-{a}_{1}\right)}^{2}=\tfrac{1}{4}{\left({\lambda }_{\max }({\boldsymbol{A}})-{\lambda }_{\min }({\boldsymbol{A}})\right)}^{2}$. Using the method of Lagrange multipliers, we set $\begin{eqnarray*}\displaystyle \begin{array}{l}L({x}_{1},{x}_{2},\cdots ,{x}_{d},\lambda )=\sum _{i=1}^{d}{a}_{i}^{2}{x}_{i}\\ \quad -\,{\left(\sum _{i=1}^{d}{a}_{i}{x}_{i}\right)}^{2}+\lambda \left(\sum _{i=1}^{d}{x}_{i}-1\right).\end{array}\end{eqnarray*}$

Next, we consider the case $d=k+1$ and determine the extremal values of $f({x}_{1},\ldots ,{x}_{k+1})$ on the boundary $\partial {{\rm{\Delta }}}_{k}$. If ${\boldsymbol{x}}\in {F}_{j}\subset \partial {{\rm{\Delta }}}_{k}$, where $j\in \left\{2,\ldots ,k\right\}$ and ${F}_{j}$ is the facet on which ${x}_{j}=0$, then ${f}_{k+1}({x}_{1},{x}_{2},\cdots ,{x}_{k+1})={f}_{k}({y}_{1},{y}_{2},\cdots ,{y}_{k})={\sum }_{i=1}^{k}{b}_{i}^{2}{y}_{i}-{\left({\sum }_{i=1}^{k}{b}_{i}{y}_{i}\right)}^{2}$, where $({y}_{1},\ldots ,{y}_{k})$ is obtained from ${\boldsymbol{x}}$ by deleting ${x}_{j}$, and ${b}_{1}={a}_{1},\cdots ,{b}_{j-1}={a}_{j-1},{b}_{j}={a}_{j+1},\cdots ,{b}_{k}={a}_{k+1}$. Clearly ${b}_{1}\lt {b}_{2}\lt \cdots \lt {b}_{k}$. By the induction hypothesis (the case $d=k$), we have

By comparing these extremal values, we know that

By the spectral decomposition theorem, ${\boldsymbol{A}}={\sum }_{j=1}^{d}{a}_{j}| {a}_{j}\rangle \langle {a}_{j}| $. Let $\left|\psi \right\rangle =\tfrac{\left|{a}_{1}\right\rangle +\left|{a}_{d}\right\rangle }{\sqrt{2}}$. Then we see that
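The bound $\tfrac{1}{4}{\left({\lambda }_{\max }({\boldsymbol{A}})-{\lambda }_{\min }({\boldsymbol{A}})\right)}^{2}$ on the variance, and its saturation by $(\left|{a}_{1}\right\rangle +\left|{a}_{d}\right\rangle )/\sqrt{2}$, are easy to probe numerically. The sketch below evaluates $f({\boldsymbol{x}})=\left\langle {{\boldsymbol{a}}}^{2},{\boldsymbol{x}}\right\rangle -{\left\langle {\boldsymbol{a}},{\boldsymbol{x}}\right\rangle }^{2}$ at random points of the probability simplex (normalized exponential variables, which are uniform on the simplex) and at $(\tfrac{1}{2},0,\ldots ,0,\tfrac{1}{2})$. The spectrum $(1,3,9,27)$ is borrowed from the worked example in section 4; the seed and sample size are arbitrary assumptions.

```python
import random


def f_var(a, x):
    """f(x) = <a^2, x> - <a, x>^2 on the probability simplex."""
    m1 = sum(ai * xi for ai, xi in zip(a, x))
    m2 = sum(ai * ai * xi for ai, xi in zip(a, x))
    return m2 - m1 * m1


def random_simplex_point(d, rng):
    """Uniform point of the simplex via normalised Exp(1) variables."""
    w = [rng.expovariate(1.0) for _ in range(d)]
    total = sum(w)
    return [wi / total for wi in w]


a = [1.0, 3.0, 9.0, 27.0]
bound = 0.25 * (max(a) - min(a)) ** 2             # (27 - 1)^2 / 4
extremal = [0.5] + [0.0] * (len(a) - 2) + [0.5]   # weights of (|a_1> + |a_d>)/sqrt(2)

rng = random.Random(2024)
largest = max(f_var(a, random_simplex_point(len(a), rng)) for _ in range(20_000))
print(bound, f_var(a, extremal), largest)
```

Random samples approach but never exceed the bound, while the extremal point attains it exactly.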

Let $({{\boldsymbol{A}}}_{1},\ldots ,{{\boldsymbol{A}}}_{n})$ be an n-tuple of qudit observables acting on ${{\mathbb{C}}}^{d}$. Denote $v({{\boldsymbol{A}}}_{k}):=\tfrac{1}{2}({\lambda }_{\max }({{\boldsymbol{A}}}_{k})-{\lambda }_{\min }({{\boldsymbol{A}}}_{k}))$ for $k=1,\ldots ,n$, where ${\lambda }_{\max /\min }({{\boldsymbol{A}}}_{k})$ stands for the maximal/minimal eigenvalue of ${{\boldsymbol{A}}}_{k}$. Then

The proof is easily obtained by combining proposition

3. PDFs of expectation values and uncertainties of qubit observables

For a given quantum observable ${\boldsymbol{A}}$ with simple spectrum $\lambda ({\boldsymbol{A}})=({\lambda }_{1}({\boldsymbol{A}}),\ldots ,{\lambda }_{d}({\boldsymbol{A}}))$, where ${\lambda }_{1}({\boldsymbol{A}})\lt \cdots \lt {\lambda }_{d}({\boldsymbol{A}})$, the PDF of $\langle {\boldsymbol{A}}{\rangle }_{\psi }$, where $\left|\psi \right\rangle $ is a Haar-distributed random pure state on ${{\mathbb{C}}}^{d}$, is given by the following:

Taking the Laplace transform $(r\to s)$ of ${f}_{\langle {\boldsymbol{A}}\rangle }^{(d)}(r)$, we get
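For a Haar-random $\left|\psi \right\rangle $ and diagonal ${\boldsymbol{A}}$, the weights ${\left|{\psi }_{k}\right|}^{2}$ are uniformly distributed on the probability simplex, so $\langle {\boldsymbol{A}}{\rangle }_{\psi }$ is a weighted sum of uniform simplex weights. The sketch below assumes the standard divided-difference (B-spline) closed form for such sums, $f(r)=(d-1){\sum }_{j}{({\lambda }_{j}-r)}_{+}^{d-2}/{\prod }_{k\ne j}({\lambda }_{j}-{\lambda }_{k})$, with CDF $F(r)=1-{\sum }_{j}{({\lambda }_{j}-r)}_{+}^{d-1}/{\prod }_{k\ne j}({\lambda }_{j}-{\lambda }_{k})$. It is offered as an independent consistency check, not as a transcription of the formula referred to above. Haar states are sampled as normalized complex Gaussian vectors; the spectrum, seed and sample size are hypothetical.

```python
import math
import random


def _denominator(lam, j):
    """prod_{k != j} (lam_j - lam_k) for a simple spectrum lam."""
    return math.prod(lam[j] - lam[k] for k in range(len(lam)) if k != j)


def pdf_expectation(r, lam):
    """Assumed closed-form PDF of <A>_psi for a Haar-random pure state on C^d."""
    d = len(lam)
    total = 0.0
    for j, lj in enumerate(lam):
        if lj > r:  # (lam_j - r)_+^{d-2}, with (.)_+^0 read as a Heaviside step
            total += (lj - r) ** (d - 2) / _denominator(lam, j)
    return (d - 1) * total


def cdf_expectation(r, lam):
    """Matching CDF: 1 - sum_j (lam_j - r)_+^{d-1} / prod_{k != j} (lam_j - lam_k)."""
    d = len(lam)
    tail = sum((lj - r) ** (d - 1) / _denominator(lam, j)
               for j, lj in enumerate(lam) if lj > r)
    return 1.0 - tail


def sample_expectation(lam, rng):
    """<A>_psi with A = diag(lam) and psi a normalised complex Gaussian (Haar) vector."""
    w = [rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2 for _ in lam]
    total = sum(w)
    return sum(lk * wk / total for lk, wk in zip(lam, w))


lam = [1.0, 3.0, 9.0, 27.0]   # hypothetical simple spectrum
rng = random.Random(11)
n = 100_000
empirical = sum(sample_expectation(lam, rng) <= 8.0 for _ in range(n)) / n
print(empirical, cdf_expectation(8.0, lam))
```

The explicit Heaviside test in `pdf_expectation` matters for $d=2$, where Python would otherwise evaluate `0.0 ** 0` as `1.0`.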

3.1. The case for one qubit observable

For the qubit observable ${\boldsymbol{A}}$ defined by equation (

Note that

3.2. The case for two qubit observables

(i) If $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly independent, then the Gram matrix ${{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}$ below is invertible, and we introduce $\begin{eqnarray*}\displaystyle \begin{array}{rcl}\left(\begin{array}{c}\tilde{\alpha }\\ \tilde{\beta }\end{array}\right) & = & {{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{\tfrac{1}{2}}\left(\begin{array}{c}\alpha \\ \beta \end{array}\right),\\ \mathrm{where}\,{{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}} & = & \left(\begin{array}{cc}\left\langle {\boldsymbol{a}},{\boldsymbol{a}}\right\rangle & \left\langle {\boldsymbol{a}},{\boldsymbol{b}}\right\rangle \\ \left\langle {\boldsymbol{a}},{\boldsymbol{b}}\right\rangle & \left\langle {\boldsymbol{b}},{\boldsymbol{b}}\right\rangle \end{array}\right),\end{array}\end{eqnarray*}$ so that ${\left|\alpha {\boldsymbol{a}}+\beta {\boldsymbol{b}}\right|}^{2}={\tilde{\alpha }}^{2}+{\tilde{\beta }}^{2}$ and ${\rm{d}}\alpha {\rm{d}}\beta ={\rm{d}}\tilde{\alpha }{\rm{d}}\tilde{\beta }/\sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})}$. Writing $(\tilde{r}-{\tilde{a}}_{0},\tilde{s}-{\tilde{b}}_{0}):=(r-{a}_{0},s-{b}_{0}){{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{-\tfrac{1}{2}}$, we see that $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s)=\displaystyle \frac{1}{{\left(2\pi \right)}^{2}\sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})}}{\displaystyle \int }_{{{\mathbb{R}}}^{2}}{\rm{d}}\tilde{\alpha }{\rm{d}}\tilde{\beta }\\ \,\times \,\exp \left({\rm{i}}((\tilde{r}-{\tilde{a}}_{0})\tilde{\alpha }+(\tilde{s}-{\tilde{b}}_{0})\tilde{\beta })\right)\displaystyle \frac{\sin \sqrt{{\tilde{\alpha }}^{2}+{\tilde{\beta }}^{2}}}{\sqrt{{\tilde{\alpha }}^{2}+{\tilde{\beta }}^{2}}}\\ \quad =\,\displaystyle \frac{1}{{\left(2\pi \right)}^{2}\sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})}}{\displaystyle \int }_{0}^{\infty }{\rm{d}}t\sin t{\displaystyle \int }_{0}^{2\pi }{\rm{d}}\theta \\ \,\times \,\exp \left({\rm{i}}t((\tilde{r}-{\tilde{a}}_{0})\cos \theta +(\tilde{s}-{\tilde{b}}_{0})\sin \theta )\right)\\ \quad =\,\displaystyle \frac{1}{2\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})}}{\displaystyle \int }_{0}^{\infty }{\rm{d}}t\sin t\,{J}_{0}\left(t\sqrt{{\left(\tilde{r}-{\tilde{a}}_{0}\right)}^{2}+{\left(\tilde{s}-{\tilde{b}}_{0}\right)}^{2}}\right),\end{array}\end{eqnarray*}$ where ${J}_{0}(z)$ is the Bessel function of the first kind of order zero, $\begin{eqnarray*}{J}_{0}(z)=\displaystyle \frac{1}{\pi }{\int }_{0}^{\pi }\cos (z\cos \theta ){\rm{d}}\theta ,\end{eqnarray*}$ and we used ${\int }_{0}^{2\pi }\exp ({\rm{i}}z\cos (\theta -\varphi )){\rm{d}}\theta =2\pi {J}_{0}(z)$. Since ${\left(\tilde{r}-{\tilde{a}}_{0}\right)}^{2}+{\left(\tilde{s}-{\tilde{b}}_{0}\right)}^{2}=(r-{a}_{0},s-{b}_{0}){{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{-1}{(r-{a}_{0},s-{b}_{0})}^{{\mathsf{T}}}$, it follows that $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s)=\displaystyle \frac{1}{2\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})}}{\displaystyle \int }_{0}^{+\infty }{\rm{d}}t\sin t\,{J}_{0}\\ \quad \times \,\left(t\cdot \sqrt{(r-{a}_{0},s-{b}_{0}){{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{-1}\left(\begin{array}{c}r-{a}_{0}\\ s-{b}_{0}\end{array}\right)}\right).\end{array}\end{eqnarray*}$ Using $\begin{eqnarray*}{\int }_{0}^{\infty }{J}_{0}(\lambda t)\sin (t){\rm{d}}t=\displaystyle \frac{1}{\sqrt{1-{\lambda }^{2}}}H(1-\left|\lambda \right|)\quad (\left|\lambda \right|\ne 1),\end{eqnarray*}$ we therefore obtain $\begin{eqnarray*}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s)=\displaystyle \frac{H(1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}(r,s))}{2\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}})(1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}^{2}(r,s))}},\end{eqnarray*}$ where $\begin{eqnarray}{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}(r,s)=\sqrt{(r-{a}_{0},s-{b}_{0}){{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{-1}\left(\begin{array}{c}r-{a}_{0}\\ s-{b}_{0}\end{array}\right)}.\end{eqnarray}$

(ii) If $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly dependent, without loss of generality let ${\boldsymbol{b}}=\kappa \cdot {\boldsymbol{a}}$ for some $\kappa \ne 0$. Then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s)=\displaystyle \frac{1}{{\left(2\pi \right)}^{2}}{\displaystyle \int }_{{{\mathbb{R}}}^{2}}{\rm{d}}\alpha {\rm{d}}\beta \\ \quad \times \,\exp \left({\rm{i}}((r-{a}_{0})\alpha +(s-{b}_{0})\beta )\right)\\ \quad \times \,\displaystyle \frac{\sin (a\left|\alpha +\beta \kappa \right|)}{a\left|\alpha +\beta \kappa \right|},\end{array}\end{eqnarray*}$ where $a=\left|{\boldsymbol{a}}\right|$. We perform the change of variables $(\alpha ,\beta )\to (\alpha ^{\prime} ,\beta ^{\prime} )$ with $\alpha ^{\prime} =\alpha +\kappa \beta $ and $\beta ^{\prime} =\beta $, whose Jacobian is $\begin{eqnarray*}\det \left(\displaystyle \frac{\partial (\alpha ^{\prime} ,\beta ^{\prime} )}{\partial (\alpha ,\beta )}\right)=\left|\begin{array}{cc}1 & \kappa \\ 0 & 1\end{array}\right|=1\ne 0.\end{eqnarray*}$ Since $(r-{a}_{0})\alpha +(s-{b}_{0})\beta =(r-{a}_{0})\alpha ^{\prime} +((s-{b}_{0})-\kappa (r-{a}_{0}))\beta ^{\prime} $, the $\beta ^{\prime} $-integral yields $2\pi \delta ((s-{b}_{0})-\kappa (r-{a}_{0}))$, and the remaining $\alpha ^{\prime} $-integral is ${f}_{\langle {\boldsymbol{A}}\rangle }^{(2)}(r)$.
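The closed form for linearly independent $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ can be checked by sampling. For a Haar-random qubit state the Bloch vector ${\boldsymbol{u}}$ is uniform on the unit sphere and $(r-{a}_{0},s-{b}_{0})=(\left\langle {\boldsymbol{u}},{\boldsymbol{a}}\right\rangle ,\left\langle {\boldsymbol{u}},{\boldsymbol{b}}\right\rangle )$. Integrating ${f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}$ over the inner ellipse ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}\leqslant {\rho }_{0}$ (substituting ${\boldsymbol{z}}={{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}}}^{-1/2}{\boldsymbol{x}}$) gives $1-\sqrt{1-{\rho }_{0}^{2}}$; this is a short calculation of ours rather than a statement from the text. The Python sketch compares it with the empirical frequency; the vectors ${\boldsymbol{a}}$, ${\boldsymbol{b}}$, the offsets, ${\rho }_{0}$, the seed and the sample size are hypothetical.

```python
import math
import random


def dot(x, y):
    """Euclidean inner product."""
    return sum(xi * yi for xi, yi in zip(x, y))


def omega_ab(x, a, b):
    """omega_{A,B} = sqrt(x T^{-1} x^T), with T the Gram matrix of a and b."""
    taa, tab, tbb = dot(a, a), dot(a, b), dot(b, b)
    det = taa * tbb - tab * tab
    quad = (tbb * x[0] ** 2 - 2.0 * tab * x[0] * x[1] + taa * x[1] ** 2) / det
    return math.sqrt(max(quad, 0.0))


def pdf_ab(r, s, a0, b0, a, b):
    """H(1 - omega) / (2 pi sqrt(det T (1 - omega^2))); zero outside the ellipse."""
    w = omega_ab((r - a0, s - b0), a, b)
    if w >= 1.0:
        return 0.0
    det = dot(a, a) * dot(b, b) - dot(a, b) ** 2
    return 1.0 / (2.0 * math.pi * math.sqrt(det * (1.0 - w * w)))


def haar_bloch_vector(rng):
    """Bloch vector of a Haar-random qubit state: uniform on the unit sphere."""
    v = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(dot(v, v))
    return [vi / norm for vi in v]


a0, b0 = 0.5, -1.0                        # hypothetical offsets
a, b = [1.0, 0.5, 0.0], [0.2, 1.0, 0.7]   # hypothetical, linearly independent
rho0 = 0.5
rng = random.Random(3)
n = 100_000
omegas = []
for _ in range(n):
    u = haar_bloch_vector(rng)
    r, s = a0 + dot(u, a), b0 + dot(u, b)
    omegas.append(omega_ab((r - a0, s - b0), a, b))
empirical = sum(w <= rho0 for w in omegas) / n
print(empirical, 1.0 - math.sqrt(1.0 - rho0 ** 2))
```

The samples also never leave the ellipse ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}\leqslant 1$, in line with the Heaviside factor.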

For a pair of qubit observables ${\boldsymbol{A}}={a}_{0}{\mathbb{1}}+{\boldsymbol{a}}\cdot {\boldsymbol{\sigma }}$ and ${\boldsymbol{B}}={b}_{0}{\mathbb{1}}+{\boldsymbol{b}}\cdot {\boldsymbol{\sigma }}$: (i) if $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly independent, then the PDF of $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {\boldsymbol{B}}{\rangle }_{\psi })$, where $\psi \in {{\mathbb{C}}}^{2}$ is a Haar-distributed pure state, is given by

(ii) If $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly dependent, without loss of generality let ${\boldsymbol{b}}=\kappa \cdot {\boldsymbol{a}}$ with $\kappa \ne 0$; then $\begin{eqnarray*}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s)=\delta ((s-{b}_{0})-\kappa (r-{a}_{0})){f}_{\langle {\boldsymbol{A}}\rangle }^{(2)}(r).\end{eqnarray*}$

The joint probability distribution density of the uncertainties $({{\rm{\Delta }}}_{\psi }{\boldsymbol{A}},{{\rm{\Delta }}}_{\psi }{\boldsymbol{B}})$ for a pair of qubit observables defined by equation (

Note that in the proof of theorem

Again, by using (

3.3. The case for three qubit observables

For three qubit observables, given by equation (

(ii) If $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=2$, then $\left\{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}\right\}$ is linearly dependent; without loss of generality, assume that $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly independent and ${\boldsymbol{c}}={\kappa }_{{\boldsymbol{a}}}\cdot {\boldsymbol{a}}+{\kappa }_{{\boldsymbol{b}}}\cdot {\boldsymbol{b}}$ for some ${\kappa }_{{\boldsymbol{a}}}$ and ${\kappa }_{{\boldsymbol{b}}}$ with ${\kappa }_{{\boldsymbol{a}}}{\kappa }_{{\boldsymbol{b}}}\ne 0$. Then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle ,\langle {\boldsymbol{C}}\rangle }^{(2)}(r,s,t)=\delta ((t-{c}_{0})\\ \quad -\,{\kappa }_{{\boldsymbol{a}}}(r-{a}_{0})-{\kappa }_{{\boldsymbol{b}}}(s-{b}_{0})){f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s).\end{array}\end{eqnarray*}$

(iii) If $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=1$, then $\left\{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}\right\}$ is linearly dependent; without loss of generality, assume that ${\boldsymbol{a}}\ne {\boldsymbol{0}}$ and ${\boldsymbol{b}}={\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}\cdot {\boldsymbol{a}}$, ${\boldsymbol{c}}={\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}\cdot {\boldsymbol{a}}$ for some ${\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}$ and ${\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}$ with ${\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}{\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}\ne 0$. Then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle ,\langle {\boldsymbol{C}}\rangle }^{(2)}(r,s,t)=\delta ((s-{b}_{0})\\ \quad -\,{\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}(r-{a}_{0}))\delta ((t-{c}_{0})-{\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}(r-{a}_{0})){f}_{\langle {\boldsymbol{A}}\rangle }^{(2)}(r).\end{array}\end{eqnarray*}$

(i) If $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=3$, then ${{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}}$ is invertible. In the Bloch representation, $| \psi \rangle \langle \psi | =\tfrac{1}{2}({{\mathbb{1}}}_{2}+{\boldsymbol{u}}\cdot {\boldsymbol{\sigma }})$ with $\left|{\boldsymbol{u}}\right|=1$. Then for $(r,s,t)=(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {\boldsymbol{B}}{\rangle }_{\psi },\langle {\boldsymbol{C}}{\rangle }_{\psi })=({a}_{0}+\left\langle {\boldsymbol{u}},{\boldsymbol{a}}\right\rangle ,{b}_{0}+\left\langle {\boldsymbol{u}},{\boldsymbol{b}}\right\rangle ,{c}_{0}+\left\langle {\boldsymbol{u}},{\boldsymbol{c}}\right\rangle )$, we see that $\begin{eqnarray*}(r-{a}_{0},s-{b}_{0},t-{c}_{0})=(\left\langle {\boldsymbol{u}},{\boldsymbol{a}}\right\rangle ,\left\langle {\boldsymbol{u}},{\boldsymbol{b}}\right\rangle ,\left\langle {\boldsymbol{u}},{\boldsymbol{c}}\right\rangle ).\end{eqnarray*}$ Denote ${\boldsymbol{Q}}:=({\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}})$, which is a $3\times 3$ invertible real matrix because $\left\{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}\right\}$ is linearly independent. Then ${{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}}={{\boldsymbol{Q}}}^{{\mathsf{T}}}{\boldsymbol{Q}}$ and $(r-{a}_{0},s-{b}_{0},t-{c}_{0})=\left\langle {\boldsymbol{u}}\right|{\boldsymbol{Q}}$, which means that $\begin{eqnarray*}{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)=\sqrt{\left\langle {\boldsymbol{u}}\right|{\boldsymbol{Q}}{\left({{\boldsymbol{Q}}}^{{\mathsf{T}}}{\boldsymbol{Q}}\right)}^{-1}{{\boldsymbol{Q}}}^{{\mathsf{T}}}\left|{\boldsymbol{u}}\right\rangle }=\left|{\boldsymbol{u}}\right|=1.\end{eqnarray*}$ This shows that $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {\boldsymbol{B}}{\rangle }_{\psi },\langle {\boldsymbol{C}}{\rangle }_{\psi })$ always lies on the boundary surface ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)=1$ of the ellipsoid ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)\leqslant 1$. Hence the PDF of $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {\boldsymbol{B}}{\rangle }_{\psi },\langle {\boldsymbol{C}}{\rangle }_{\psi })$ satisfies $\begin{eqnarray*}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle ,\langle {\boldsymbol{C}}\rangle }^{(2)}(r,s,t)\propto \delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)).\end{eqnarray*}$ To fix the normalization, we show that $\begin{eqnarray*}{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)){\rm{d}}r{\rm{d}}s{\rm{d}}t=4\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})}.\end{eqnarray*}$ Clearly $\begin{eqnarray*}{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)){\rm{d}}r{\rm{d}}s{\rm{d}}t={\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta \left(1-\sqrt{\left\langle {\boldsymbol{x}}\left|{{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}}^{-1}\right|{\boldsymbol{x}}\right\rangle }\right)[{\rm{d}}{\boldsymbol{x}}],\end{eqnarray*}$ where ${\boldsymbol{x}}=(r-{a}_{0},s-{b}_{0},t-{c}_{0})$ and $[{\rm{d}}{\boldsymbol{x}}]={\rm{d}}r{\rm{d}}s{\rm{d}}t$. By the spectral decomposition theorem for real symmetric matrices, there is an orthogonal matrix ${\boldsymbol{O}}\in \mathrm{O}(3)$ such that ${{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}}={{\boldsymbol{O}}}^{{\mathsf{T}}}\mathrm{diag}({\lambda }_{1},{\lambda }_{2},{\lambda }_{3}){\boldsymbol{O}}$ with ${\lambda }_{k}\gt 0$ $(k=1,2,3)$. Thus $\begin{eqnarray*}\displaystyle \begin{array}{l}{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)=\sqrt{\left\langle {\boldsymbol{O}}{\boldsymbol{x}}\left|\mathrm{diag}({\lambda }_{1}^{-1},{\lambda }_{2}^{-1},{\lambda }_{3}^{-1})\right|{\boldsymbol{O}}{\boldsymbol{x}}\right\rangle }\\ \quad =\,\sqrt{\left\langle {\boldsymbol{y}}\left|\mathrm{diag}({\lambda }_{1}^{-1},{\lambda }_{2}^{-1},{\lambda }_{3}^{-1})\right|{\boldsymbol{y}}\right\rangle },\end{array}\end{eqnarray*}$ where ${\boldsymbol{y}}={\boldsymbol{O}}{\boldsymbol{x}}$, and since $\left|\det {\boldsymbol{O}}\right|=1$, $\begin{eqnarray*}\displaystyle \begin{array}{l}{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)){\rm{d}}r{\rm{d}}s{\rm{d}}t\\ \quad =\,{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta \left(1-\sqrt{\left\langle {\boldsymbol{y}}\left|\mathrm{diag}({\lambda }_{1}^{-1},{\lambda }_{2}^{-1},{\lambda }_{3}^{-1})\right|{\boldsymbol{y}}\right\rangle }\right)[{\rm{d}}{\boldsymbol{y}}].\end{array}\end{eqnarray*}$ Let ${\boldsymbol{z}}=\mathrm{diag}({\lambda }_{1}^{-1/2},{\lambda }_{2}^{-1/2},{\lambda }_{3}^{-1/2}){\boldsymbol{y}}$. Then $[{\rm{d}}{\boldsymbol{z}}]=\tfrac{1}{\sqrt{{\lambda }_{1}{\lambda }_{2}{\lambda }_{3}}}[{\rm{d}}{\boldsymbol{y}}]=\tfrac{1}{\sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})}}[{\rm{d}}{\boldsymbol{y}}]$ and, since ${\int }_{{{\mathbb{R}}}^{3}}\delta (1-\left|{\boldsymbol{z}}\right|)[{\rm{d}}{\boldsymbol{z}}]=4\pi $ is the area of the unit sphere, $\begin{eqnarray*}\displaystyle \begin{array}{l}{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)){\rm{d}}r{\rm{d}}s{\rm{d}}t\\ \quad =\,\sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})}{\displaystyle \int }_{{{\mathbb{R}}}^{3}}\delta \left(1-\left|{\boldsymbol{z}}\right|\right)[{\rm{d}}{\boldsymbol{z}}]=4\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})}.\end{array}\end{eqnarray*}$ Finally, we get $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle ,\langle {\boldsymbol{C}}\rangle }^{(2)}(r,s,t)\\ \quad =\,\displaystyle \frac{1}{4\pi \sqrt{\det ({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})}}\delta (1-{\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)).\end{array}\end{eqnarray*}$

(ii) If $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=2$, then $\left\{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}\right\}$ is linearly dependent. Without loss of generality, we assume that $\left\{{\boldsymbol{a}},{\boldsymbol{b}}\right\}$ is linearly independent. Now ${\boldsymbol{c}}={\kappa }_{{\boldsymbol{a}}}{\boldsymbol{a}}+{\kappa }_{{\boldsymbol{b}}}{\boldsymbol{b}}$ for some ${\kappa }_{{\boldsymbol{a}}},{\kappa }_{{\boldsymbol{b}}}\in {\mathbb{R}}$ with ${\kappa }_{{\boldsymbol{a}}}{\kappa }_{{\boldsymbol{b}}}\ne 0$. Thus $\begin{eqnarray*}\displaystyle \begin{array}{rcl}t-{c}_{0} & = & \langle {\boldsymbol{C}}{\rangle }_{\psi }-{c}_{0}=\left\langle \psi \left|{\boldsymbol{c}}\cdot {\boldsymbol{\sigma }}\right|\psi \right\rangle \\ & = & {\kappa }_{{\boldsymbol{a}}}\left\langle \psi \left|{\boldsymbol{a}}\cdot {\boldsymbol{\sigma }}\right|\psi \right\rangle +{\kappa }_{{\boldsymbol{b}}}\left\langle \psi \left|{\boldsymbol{b}}\cdot {\boldsymbol{\sigma }}\right|\psi \right\rangle \\ & = & {\kappa }_{{\boldsymbol{a}}}(r-{a}_{0})+{\kappa }_{{\boldsymbol{b}}}(s-{b}_{0}).\end{array}\end{eqnarray*}$ Therefore we get $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle ,\langle {\boldsymbol{C}}\rangle }^{(2)}(r,s,t)=\delta ((t-{c}_{0})\\ \quad -\,{\kappa }_{{\boldsymbol{a}}}(r-{a}_{0})-{\kappa }_{{\boldsymbol{b}}}(s-{b}_{0})){f}_{\langle {\boldsymbol{A}}\rangle ,\langle {\boldsymbol{B}}\rangle }^{(2)}(r,s).\end{array}\end{eqnarray*}$

(iii) If $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=1$, then $\left\{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}\right\}$ is linearly dependent. Without loss of generality, we assume that ${\boldsymbol{a}}\ne {\boldsymbol{0}}$ and ${\boldsymbol{b}}={\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}\cdot {\boldsymbol{a}}$, ${\boldsymbol{c}}={\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}\cdot {\boldsymbol{a}}$ for some ${\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}$ and ${\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}$ with ${\kappa }_{{\boldsymbol{b}}{\boldsymbol{a}}}{\kappa }_{{\boldsymbol{c}}{\boldsymbol{a}}}\ne 0$. The result then follows by the same argument as in (ii).
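The observation that $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {\boldsymbol{B}}{\rangle }_{\psi },\langle {\boldsymbol{C}}{\rangle }_{\psi })$ always lies on the ellipsoid ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}=1$ when $\mathrm{rank}({{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}})=3$ can be checked directly with Pauli matrices, without passing through the Bloch vector. The Python sketch below assumes ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}(r,s,t)=\sqrt{{\boldsymbol{x}}{{\boldsymbol{T}}}_{{\boldsymbol{a}},{\boldsymbol{b}},{\boldsymbol{c}}}^{-1}{{\boldsymbol{x}}}^{{\mathsf{T}}}}$ with ${\boldsymbol{x}}=(r-{a}_{0},s-{b}_{0},t-{c}_{0})$, by analogy with ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}}}$; the coefficient vectors, offsets and random states are hypothetical.

```python
import math
import random

# Pauli matrices sigma_x, sigma_y, sigma_z as nested lists.
SIGMA = (
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
)


def expectation(c0, c, psi):
    """<psi| c0 * 1 + c . sigma |psi> for a normalised qubit state psi."""
    value = c0
    for ck, sk in zip(c, SIGMA):
        for i in range(2):
            for j in range(2):
                value += ck * (psi[i].conjugate() * sk[i][j] * psi[j])
    return value.real


def gram(vectors):
    """Gram matrix T with entries <v, w>."""
    return [[sum(x * y for x, y in zip(v, w)) for w in vectors] for v in vectors]


def inverse3(m):
    """Inverse of an invertible 3x3 matrix via cofactors (cyclic index form)."""
    cof = [[m[(i + 1) % 3][(j + 1) % 3] * m[(i + 2) % 3][(j + 2) % 3]
            - m[(i + 1) % 3][(j + 2) % 3] * m[(i + 2) % 3][(j + 1) % 3]
            for j in range(3)] for i in range(3)]
    det = sum(m[0][j] * cof[0][j] for j in range(3))
    return [[cof[j][i] / det for j in range(3)] for i in range(3)]


def omega_abc(x, tinv):
    """sqrt(x T^{-1} x^T)."""
    quad = sum(x[i] * tinv[i][j] * x[j] for i in range(3) for j in range(3))
    return math.sqrt(max(quad, 0.0))


def random_qubit(rng):
    """Haar-random qubit state from a normalised complex Gaussian vector."""
    z = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(2)]
    norm = math.sqrt(sum(abs(zk) ** 2 for zk in z))
    return [zk / norm for zk in z]


a0, b0, c0 = 0.3, -0.2, 1.0                                    # hypothetical offsets
a, b, c = [1.0, 0.0, 0.5], [0.3, 1.2, 0.0], [0.0, -0.4, 0.9]   # hypothetical, independent
tinv = inverse3(gram([a, b, c]))
rng = random.Random(5)
omegas = []
for _ in range(1000):
    psi = random_qubit(rng)
    x = (expectation(a0, a, psi) - a0, expectation(b0, b, psi) - b0,
         expectation(c0, c, psi) - c0)
    omegas.append(omega_abc(x, tinv))
print(min(omegas), max(omegas))  # both equal to 1 up to rounding
```

Every sampled point lands on the surface ${\omega }_{{\boldsymbol{A}},{\boldsymbol{B}},{\boldsymbol{C}}}=1$, which is why the PDF in case (i) is a surface delta rather than a volume density.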

The joint probability distribution density of $({{\rm{\Delta }}}_{\psi }{\boldsymbol{A}},{{\rm{\Delta }}}_{\psi }{\boldsymbol{B}},{{\rm{\Delta }}}_{\psi }{\boldsymbol{C}})$ for a triple of qubit observables defined by equation (

Note that

4. PDF of uncertainty of a single qudit observable

For a given qutrit observable ${\boldsymbol{A}}$ acting on ${{\mathbb{C}}}^{3}$, with eigenvalues ${a}_{1}\lt {a}_{2}\lt {a}_{3}$, the joint pdf of $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {{\boldsymbol{A}}}^{2}{\rangle }_{\psi })$, where $\left|\psi \right\rangle \in {{\mathbb{C}}}^{3}$ is a Haar-distributed random pure state, is given by
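For $d=3$ a short argument of our own (not a transcription of the formula referred to above) indicates what to expect. With ${\boldsymbol{A}}=\mathrm{diag}({a}_{1},{a}_{2},{a}_{3})$ and ${r}_{k}={\left|{\psi }_{k}\right|}^{2}$ uniform on the simplex, $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {{\boldsymbol{A}}}^{2}{\rangle }_{\psi })={\sum }_{k}{r}_{k}({a}_{k},{a}_{k}^{2})$ is an affine bijection of the simplex onto the triangle with vertices $({a}_{k},{a}_{k}^{2})$, so the joint density is constant on that triangle and equals the reciprocal of its area, $2/[({a}_{2}-{a}_{1})({a}_{3}-{a}_{1})({a}_{3}-{a}_{2})]$. The Python sketch compares this value with the fraction of Haar samples falling in a small box inside the triangle; the spectrum $(0,1,2)$, the box, the seed and the sample size are hypothetical.

```python
import random


def qutrit_joint_density(a):
    """Constant joint density of (<A>, <A^2>) on the triangle conv{(a_k, a_k^2)}."""
    a1, a2, a3 = sorted(a)
    return 2.0 / ((a2 - a1) * (a3 - a1) * (a3 - a2))


def sample_moments(a, rng):
    """(<A>_psi, <A^2>_psi) for A = diag(a) and a Haar-random pure state."""
    w = [rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2 for _ in a]
    total = sum(w)
    r = [wi / total for wi in w]
    return (sum(ak * rk for ak, rk in zip(a, r)),
            sum(ak * ak * rk for ak, rk in zip(a, r)))


a = (0.0, 1.0, 2.0)              # hypothetical qutrit spectrum
box = ((0.8, 1.0), (1.0, 1.5))   # hypothetical box lying inside the triangle
rng = random.Random(17)
n = 100_000
hits = 0
for _ in range(n):
    m1, m2 = sample_moments(a, rng)
    if box[0][0] <= m1 <= box[0][1] and box[1][0] <= m2 <= box[1][1]:
        hits += 1
box_area = (box[0][1] - box[0][0]) * (box[1][1] - box[1][0])
print(hits / n / box_area, qutrit_joint_density(a))
```

For $d\geqslant 4$ the same map is no longer injective, which is consistent with the piecewise regions that appear in the $d=4$ statements.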

For a given qudit observable ${\boldsymbol{A}}$ acting on ${{\mathbb{C}}}^{4}$, with eigenvalues ${a}_{1}\lt {a}_{2}\lt {a}_{3}\lt {a}_{4}$, the joint pdf of $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {{\boldsymbol{A}}}^{2}{\rangle }_{\psi })$, where $\left|\psi \right\rangle \in {{\mathbb{C}}}^{4}$ is a Haar-distributed random pure state, is given by

Denote ${D}_{1}={\bigcup }_{i=1}^{2}{D}_{i}^{(1)}$, ${D}_{2}={\bigcup }_{j=1}^{6}{D}_{j}^{(2)}$ and ${D}_{3}={\bigcup }_{k=1}^{2}{D}_{k}^{(3)}$. Thus

For a given qudit observable ${\boldsymbol{A}}$ acting on ${{\mathbb{C}}}^{4}$, with eigenvalues ${a}_{1}\lt {a}_{2}\lt {a}_{3}\lt {a}_{4}$, the joint pdf of $(\langle {\boldsymbol{A}}{\rangle }_{\psi },{{\rm{\Delta }}}_{\psi }{\boldsymbol{A}})$, where $\left|\psi \right\rangle \in {{\mathbb{C}}}^{4}$ is a Haar-distributed random pure state, is given by

Denote ${R}_{1}={\bigcup }_{i=1}^{2}{R}_{i}^{(1)}$, ${R}_{2}={\bigcup }_{j=1}^{6}{R}_{j}^{(2)}$ and ${R}_{3}={\bigcup }_{k=1}^{2}{R}_{k}^{(3)}$. Thus

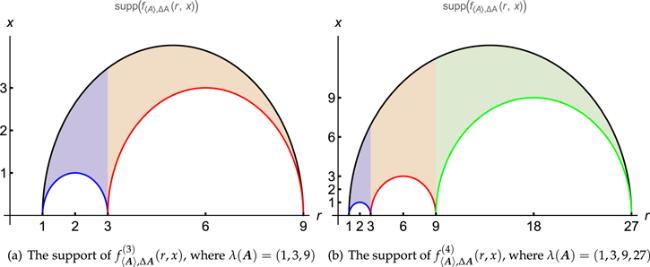

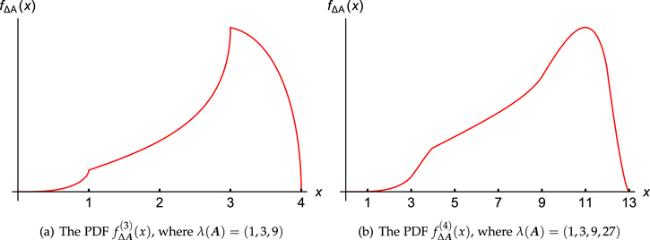

Figure 1. Plots of the supports of ${f}_{\langle {\boldsymbol{A}}\rangle ,{\rm{\Delta }}{\boldsymbol{A}}}^{(d)}(r,x)$ for qudit observables ${\boldsymbol{A}}$.

Consider a qudit observable ${\boldsymbol{A}}$ acting on ${{\mathbb{C}}}^{4}$ with eigenvalues $\lambda ({\boldsymbol{A}})=(1,3,9,27)$. Still employing the notation in (

(i) If $x\in [0,1]$, then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{33696}\left(9{\varepsilon }_{12}^{3}(x)+13{\varepsilon }_{23}^{3}(x)\right.\\ \quad \left.-12{\varepsilon }_{13}^{3}(x)+{\varepsilon }_{34}^{3}(x)-4{\varepsilon }_{24}^{3}(x)+3{\varepsilon }_{14}^{3}(x)\right).\end{array}\end{eqnarray*}$

(ii) If $x\in [1,3]$, then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{33696}\left(13{\varepsilon }_{23}^{3}(x)-12{\varepsilon }_{13}^{3}(x)\right.\\ \quad \left.+{\varepsilon }_{34}^{3}(x)-4{\varepsilon }_{24}^{3}(x)+3{\varepsilon }_{14}^{3}(x)\right).\end{array}\end{eqnarray*}$

(iii) If $x\in [3,4]$, then $\begin{eqnarray*}\displaystyle \begin{array}{l}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{33696}\left(-12{\varepsilon }_{13}^{3}(x)\right.\\ \quad \left.+{\varepsilon }_{34}^{3}(x)-4{\varepsilon }_{24}^{3}(x)+3{\varepsilon }_{14}^{3}(x)\right).\end{array}\end{eqnarray*}$

(iv) If $x\in [4,9]$, then $\begin{eqnarray*}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{33696}\left({\varepsilon }_{34}^{3}(x)-4{\varepsilon }_{24}^{3}(x)+3{\varepsilon }_{14}^{3}(x)\right).\end{eqnarray*}$

(v) If $x\in [9,12]$, then $\begin{eqnarray*}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{33696}\left(-4{\varepsilon }_{24}^{3}(x)+3{\varepsilon }_{14}^{3}(x)\right).\end{eqnarray*}$

(vi) If $x\in [12,13]$, then $\begin{eqnarray*}{f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(4)}(x)=\displaystyle \frac{x}{11232}{\varepsilon }_{14}^{3}(x).\end{eqnarray*}$
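The piecewise formula can be checked numerically. The definition of ${\varepsilon }_{ij}(x)$ belongs to the notation referred to above and is not reproduced in this section, so the sketch below assumes ${\varepsilon }_{ij}(x)=\sqrt{\tfrac{1}{4}{({a}_{j}-{a}_{i})}^{2}-{x}^{2}}$ for $x\leqslant \tfrac{1}{2}({a}_{j}-{a}_{i})$ and $0$ otherwise; this is consistent with the breakpoints $1,3,4,9,12,13$ of cases (i) to (vi), which are exactly the half-gaps $\tfrac{1}{2}({a}_{j}-{a}_{i})$. Under this assumption the density should integrate to $1$, and its second moment should equal the mean variance $84$ that we obtain from the moments of uniform simplex weights. The code checks both and compares the CDF at $x=6$ with a Haar Monte Carlo estimate; the seed, grid and sample size are arbitrary.

```python
import math
import random

EIGS = (1.0, 3.0, 9.0, 27.0)   # lambda(A) in the worked example
# Coefficients of eps_ij^3 read off from cases (i) to (vi), with 0-based indices;
# 3/33696 = 1/11232 reproduces case (vi).
COEFFS = {(0, 1): 9, (1, 2): 13, (0, 2): -12, (2, 3): 1, (1, 3): -4, (0, 3): 3}


def eps(i, j, x):
    """ASSUMED eps_ij(x) = sqrt(((a_j - a_i)/2)^2 - x^2), set to 0 past the half-gap."""
    half_gap = 0.5 * (EIGS[j] - EIGS[i])
    return math.sqrt(max(half_gap * half_gap - x * x, 0.0))


def pdf_delta_a(x):
    """f_{Delta A}^{(4)}(x); vanishing eps_ij terms reproduce cases (i) to (vi)."""
    if x < 0.0 or x > 0.5 * (EIGS[-1] - EIGS[0]):
        return 0.0
    return x * sum(c * eps(i, j, x) ** 3 for (i, j), c in COEFFS.items()) / 33696.0


def simpson(f, lo, hi, n=20_000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (hi - lo) / n
    s = f(lo) + f(hi) + sum((4 if k % 2 else 2) * f(lo + k * h) for k in range(1, n))
    return s * h / 3.0


def sample_delta_a(rng):
    """Delta_psi A for a Haar-random pure state (A diagonal, |psi_k|^2 uniform on the simplex)."""
    w = [rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2 for _ in EIGS]
    total = sum(w)
    m1 = sum(ak * wk for ak, wk in zip(EIGS, w)) / total
    m2 = sum(ak * ak * wk for ak, wk in zip(EIGS, w)) / total
    return math.sqrt(max(m2 - m1 * m1, 0.0))


norm = simpson(pdf_delta_a, 0.0, 13.0)
second_moment = simpson(lambda x: x * x * pdf_delta_a(x), 0.0, 13.0)
rng = random.Random(1)
n = 100_000
empirical_cdf = sum(sample_delta_a(rng) <= 6.0 for _ in range(n)) / n
print(norm, second_moment, empirical_cdf, simpson(pdf_delta_a, 0.0, 6.0))
```

Encoding each case through the same six terms, with a term switched off once $x$ passes its half-gap, avoids hard-coding the six intervals separately.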

Figure 2. Plots of the PDFs ${f}_{{\rm{\Delta }}{\boldsymbol{A}}}^{(d)}(x)$ for qudit observables ${\boldsymbol{A}}$.

For a qudit observable ${\boldsymbol{A}}$ acting on ${{\mathbb{C}}}^{d}$ $(d\gt 1)$, with eigenvalues $\lambda ({\boldsymbol{A}})=({a}_{1},\ldots ,{a}_{d})$, where ${a}_{1}\lt \cdots \lt {a}_{d}$, the supports of the PDFs ${f}_{\langle {\boldsymbol{A}}\rangle ,\langle {{\boldsymbol{A}}}^{2}\rangle }^{(d)}(r,s)$ and ${f}_{\langle {\boldsymbol{A}}\rangle ,{\rm{\Delta }}{\boldsymbol{A}}}^{(d)}(r,x)$ are, respectively, given by the following:

Without loss of generality, we assume that ${\boldsymbol{A}}\,=\mathrm{diag}({a}_{1},\ldots ,{a}_{d})$ with ${a}_{1}\lt \cdots \lt {a}_{d}$. Let $\left|\psi \right\rangle ={\left({\psi }_{1},\ldots ,{\psi }_{d}\right)}^{{\mathsf{T}}}\in {{\mathbb{C}}}^{d}$ be a pure state and ${r}_{k}={\left|{\psi }_{k}\right|}^{2}$. Thus $({r}_{1},\ldots ,{r}_{d})$ is a d-dimensional probability vector. Then
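With this normalization, $(\langle {\boldsymbol{A}}{\rangle }_{\psi },\langle {{\boldsymbol{A}}}^{2}{\rangle }_{\psi })={\sum }_{k}{r}_{k}({a}_{k},{a}_{k}^{2})$ is a convex combination of points on the parabola $s={r}^{2}$, so it lies in the convex polygon with vertices $({a}_{k},{a}_{k}^{2})$: above the chain of chords joining consecutive vertices and below the chord from $({a}_{1},{a}_{1}^{2})$ to $({a}_{d},{a}_{d}^{2})$. The upper chord gives $\langle {{\boldsymbol{A}}}^{2}{\rangle }_{\psi }\leqslant ({a}_{1}+{a}_{d})\langle {\boldsymbol{A}}{\rangle }_{\psi }-{a}_{1}{a}_{d}$, i.e. ${{\rm{\Delta }}}_{\psi }{\boldsymbol{A}}\leqslant \sqrt{(\langle {\boldsymbol{A}}{\rangle }_{\psi }-{a}_{1})({a}_{d}-\langle {\boldsymbol{A}}{\rangle }_{\psi })}$, whose maximum over $\langle {\boldsymbol{A}}{\rangle }_{\psi }$ is $\tfrac{1}{2}({a}_{d}-{a}_{1})$. The Python sketch checks these inequalities on random samples; it is a consistency check of our own rather than a restatement of the result above, and the spectrum, seed and sample size are hypothetical.

```python
import random


def lower_chain(a, r):
    """Piecewise-linear interpolation of s = r^2 through sorted nodes a_1 < ... < a_d."""
    r = min(max(r, a[0]), a[-1])  # guard against rounding just outside [a_1, a_d]
    for k in range(len(a) - 1):
        if a[k] <= r <= a[k + 1]:
            t = (r - a[k]) / (a[k + 1] - a[k])
            return (1.0 - t) * a[k] ** 2 + t * a[k + 1] ** 2
    raise ValueError("nodes must be sorted increasingly")


def upper_chord(a, r):
    """Chord of s = r^2 between (a_1, a_1^2) and (a_d, a_d^2)."""
    return (a[0] + a[-1]) * r - a[0] * a[-1]


def sample_moments(a, rng):
    """(<A>, <A^2>) for uniform simplex weights r_k = |psi_k|^2."""
    w = [rng.expovariate(1.0) for _ in a]
    total = sum(w)
    r = [wi / total for wi in w]
    return sum(x * p for x, p in zip(a, r)), sum(x * x * p for x, p in zip(a, r))


a = [1.0, 3.0, 9.0, 27.0]   # hypothetical spectrum
rng = random.Random(23)
samples = [sample_moments(a, rng) for _ in range(20_000)]
inside = all(lower_chain(a, m1) - 1e-9 <= m2 <= upper_chord(a, m1) + 1e-9
             for m1, m2 in samples)
print(inside)
```

At the midpoint $\langle {\boldsymbol{A}}{\rangle }_{\psi }=\tfrac{1}{2}({a}_{1}+{a}_{d})$ the upper chord gives variance $\tfrac{1}{4}{({a}_{d}-{a}_{1})}^{2}$, matching the maximal-variance result of section 2.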

5. Discussion and concluding remarks

For a pair of qubit observables $({\boldsymbol{A}},{\boldsymbol{B}})$ acting on ${{\mathbb{C}}}^{2}$, it holds that