In recent years, deep learning has triggered a new wave of artificial intelligence research [8–10]. It has achieved great success in many fields, such as image classification, natural language processing, and object detection [11, 12]. Beyond these traditional research fields, the integration of artificial intelligence with the natural sciences has received increasing attention [13–15], and this interdisciplinary area has already produced many exciting results. For example, AlphaFold2 addresses the prediction of protein folding and three-dimensional structure [16].

Another active topic in this area is the application of deep neural networks to solving PDEs [
17–19]. The theoretical basis of this research is the universal approximation theorem [20]: a multi-layer feedforward network with a sufficient number of hidden-layer neurons can approximate any continuous function on a compact domain to arbitrary accuracy. Mathematically, deep learning thus provides a tool for approximating high-dimensional functions. Traditional numerical methods often suffer from the curse of dimensionality, whereas deep neural networks can cope with this problem [21, 22], because the computational cost of a neural network grows only linearly with the dimension. Moreover, compared with traditional grid-based methods such as the finite difference and finite volume methods, deep learning is a flexible, mesh-free approach that is simpler to implement. In terms of hardware, deep neural networks can readily exploit graphics processing units (GPUs), which speeds up the solution of PDEs. A large body of research has shown that neural networks have distinct advantages for solving PDEs [
23–26]. Some researchers use neural networks in place of traditional numerical discretization methods to approximate the solutions of PDEs; for example, Lagaris et al. used neural networks to solve initial and boundary value problems [27, 28]. Others use neural networks to improve traditional numerical solvers for PDEs, thereby increasing the computational efficiency of existing methods [29, 30].

Among these works, one of the most representative is the work on physics-informed neural networks (PINNs) [
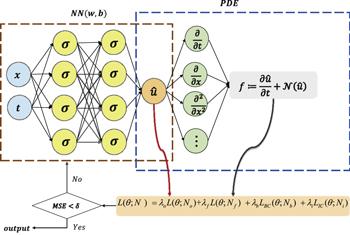

31–35], which introduces a new composite loss function. The loss function consists of four parts: observation data constraints, PDE constraints, boundary condition constraints, and initial condition constraints. PINNs encode the governing physical equations into the neural network, significantly improving the performance of neural network models. In terms of implementation, PINNs take full advantage of automatic differentiation [36], a mechanism that is now widely used in deep learning frameworks. Unlike symbolic and numerical differentiation, automatic differentiation relies on a computational graph: it applies symbolic differentiation rules within each node of the graph and passes numerical derivative values between nodes. As a result, automatic differentiation is more accurate than numerical differentiation and more efficient than symbolic differentiation. Since PINNs were proposed, many researchers have studied and improved the original formulation, proposed various improved PINNs, and applied them in many fields, showing great application prospects [
37–41]. Chen et al. have carried out extensive simulation studies of localized wave solutions of integrable equations using deep learning methods, which can effectively characterize the dynamical behavior of these solutions. They have also proposed the theoretical architecture and development direction of integrable deep learning, which has promoted related research on nonlinear mathematical physics and integrable systems [42–47].

In recent years, with the rapid development of ocean sound field calculation, atmospheric pollution diffusion simulation, seismic wave inversion and prediction, and related applications [
48–50], higher requirements have been placed on the accuracy and efficiency of numerical methods, and new methods and tools for solving PDEs are urgently needed. Researchers have used physics-informed neural networks to solve the frequency-domain wave equation, simulate seismic multifrequency wavefields, and study wave propagation and full waveform inversion in depth, achieving a series of results [51–55]. PINNs are expected to provide a new opportunity to solve a large number of scientific and engineering problems and to bring breakthroughs in the related research.
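
The comparison made above between automatic and numerical differentiation can be illustrated with a minimal sketch. It assumes forward-mode automatic differentiation implemented with dual numbers, which is a simplification: deep learning frameworks typically use reverse-mode differentiation on a computational graph. The class `Dual`, the helper functions, and the one-neuron function `u` with its weights are hypothetical and chosen only for illustration; they are not taken from the PINN literature. Each operation applies its exact local derivative rule and passes a numerical derivative value on to the next operation.

```python
import math


class Dual:
    """Dual number val + der*eps with eps**2 == 0; `der` carries the derivative."""

    def __init__(self, val, der=0.0):
        self.val = float(val)
        self.der = float(der)

    def __add__(self, other):
        other = lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        # Product rule applied locally at this node.
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def lift(x):
    """Wrap a plain number as a constant (zero derivative)."""
    return x if isinstance(x, Dual) else Dual(x)


def tanh(x):
    """tanh node with the exact local rule d/dz tanh(z) = 1 - tanh(z)**2."""
    x = lift(x)
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.der)


def u(x):
    """Hypothetical one-neuron 'network' with fixed, made-up weights."""
    return 0.5 * tanh(2.0 * x + 0.1) + 0.3


def autodiff_derivative(f, x0):
    """Seed dx/dx = 1 and read the derivative off the output."""
    return f(Dual(x0, 1.0)).der


def central_difference(f, x0, h=1e-3):
    """Numerical differentiation; the truncation error is O(h**2)."""
    return (f(x0 + h).val - f(x0 - h).val) / (2.0 * h)


def exact_derivative(x0):
    """Closed-form derivative of u, used as the reference value."""
    return 1.0 - math.tanh(2.0 * x0 + 0.1) ** 2


x0 = 0.3
ad_error = abs(autodiff_derivative(u, x0) - exact_derivative(x0))
fd_error = abs(central_difference(u, x0) - exact_derivative(x0))
print(f"autodiff error: {ad_error:.2e}, finite-difference error: {fd_error:.2e}")
```

In this sketch the automatic-differentiation result agrees with the closed-form derivative up to floating-point rounding, whereas the central difference carries a truncation error that depends on the step size `h`. In a PINN, derivatives obtained in this way would enter the PDE constraint term of the loss function.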