1. Introduction

2. Physics informed memory networks

2.1. Problem setup

2.2. Physics informed memory networks

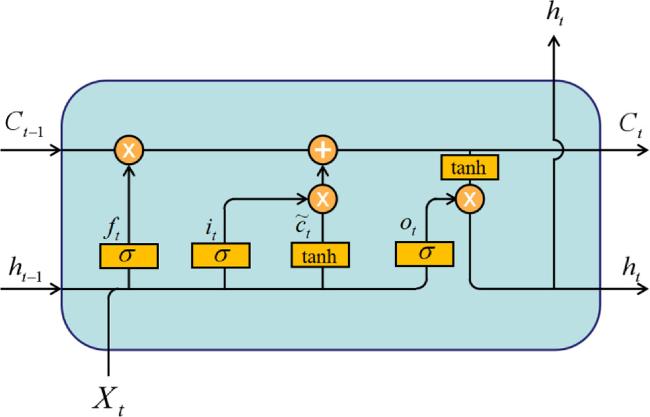

Figure 1. The general structure of LSTM.

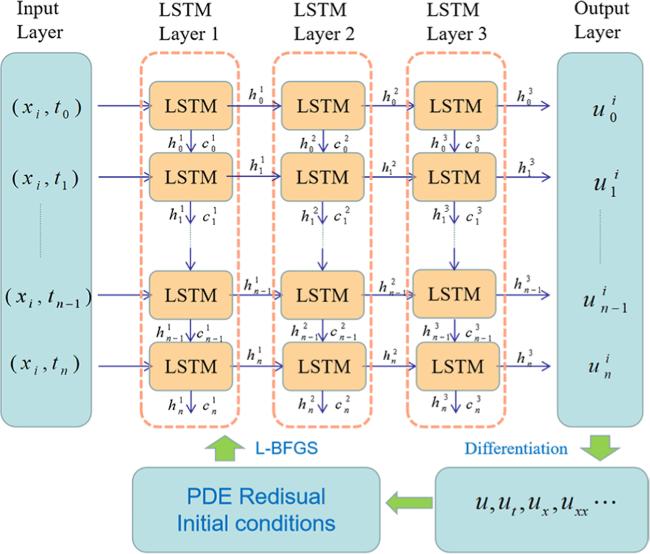

Figure 2. The general structure of the PIMNs.

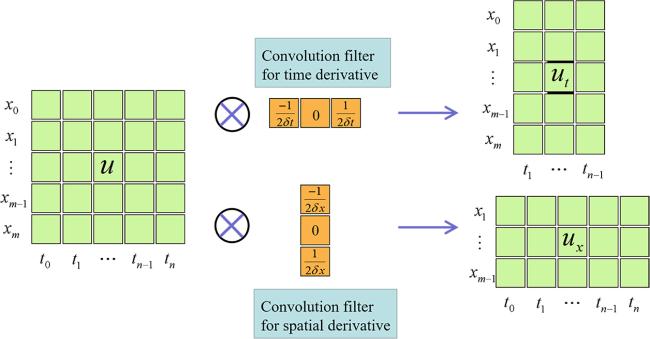

Figure 3. The convolution process of difference schemes.
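As a rough illustration of what Figure 3 describes, a finite-difference scheme can be written as a small fixed kernel slid across the grid values. The sketch below is a minimal NumPy version for a first derivative on a uniform 1D grid. The names `STENCILS` and `difference`, the three-point kernel layout, and the choice to drop the two boundary points are assumptions made for illustration, not the paper's implementation.

```python
import numpy as np

# Hypothetical three-point stencils for du/dx on a uniform grid.
# Each kernel weights the neighbours (u[i-1], u[i], u[i+1]) in that order.
STENCILS = {
    "forward": np.array([0.0, -1.0, 1.0]),   # (u[i+1] - u[i]) / h
    "backward": np.array([-1.0, 1.0, 0.0]),  # (u[i] - u[i-1]) / h
    "central": np.array([-0.5, 0.0, 0.5]),   # (u[i+1] - u[i-1]) / (2h)
}


def difference(u, h, scheme="central"):
    """Approximate du/dx at the interior points of a 1D grid.

    The kernel is applied as a sliding dot product (cross-correlation),
    which is what a convolution layer with fixed weights computes.
    The first and last grid points are dropped ("valid" mode).
    """
    kernel = STENCILS[scheme] / h
    return np.correlate(np.asarray(u, dtype=float), kernel, mode="valid")


# Usage: differentiate sin(x) and compare with cos(x) at interior points.
x = np.linspace(0.0, 2.0 * np.pi, 201)
h = x[1] - x[0]
u = np.sin(x)
err_central = np.max(np.abs(difference(u, h, "central") - np.cos(x[1:-1])))
err_forward = np.max(np.abs(difference(u, h, "forward") - np.cos(x[1:-1])))
```

On this smooth test function the central stencil is second-order accurate in `h`, while the forward and backward stencils are first-order.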

3. Numerical experiments

3.1. Case 1: The KdV equation

3.1.1. Comparison of different difference schemes for solving the KdV equation

Table 1. The KdV equation: relative L2 errors for different difference schemes.

| Difference schemes | Forward difference | Backward difference | Central difference |
|---|---|---|---|
| 1 | 4.355 988 × 10⁻³ | 4.638 906 × 10⁻³ | 2.034 928 × 10⁻³ |
| 2 | 4.058 963 × 10⁻³ | 4.640 369 × 10⁻³ | 1.317 186 × 10⁻³ |
| 3 | 4.884 505 × 10⁻³ | 4.459 566 × 10⁻³ | 1.124 485 × 10⁻³ |
| 4 | 4.580 123 × 10⁻³ | 4.348 073 × 10⁻³ | 9.399 773 × 10⁻⁴ |
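For reference, the relative L2 error reported throughout these tables is conventionally the L2 norm of the difference between the learned and reference solutions divided by the L2 norm of the reference solution. The sketch below assumes that standard definition, evaluated over all space-time grid points. The function name `relative_l2_error` is hypothetical, and the paper's exact normalization is not shown in this section.

```python
import numpy as np


def relative_l2_error(u_pred, u_exact):
    """Return ||u_pred - u_exact||_2 / ||u_exact||_2 over all grid points.

    Both inputs are flattened first, so 2D (space x time) arrays and
    complex-valued solutions are handled the same way as 1D real ones.
    """
    u_pred = np.asarray(u_pred).ravel()
    u_exact = np.asarray(u_exact).ravel()
    return np.linalg.norm(u_pred - u_exact) / np.linalg.norm(u_exact)


# Usage: the reference has norm 5 and the prediction is off by 0.5 in one entry.
example_error = relative_l2_error([3.0, 4.5], [3.0, 4.0])
```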

3.1.2. The effect of boundary conditions on solving the KdV equation

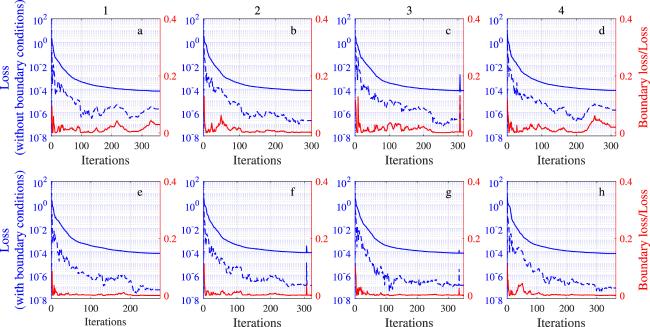

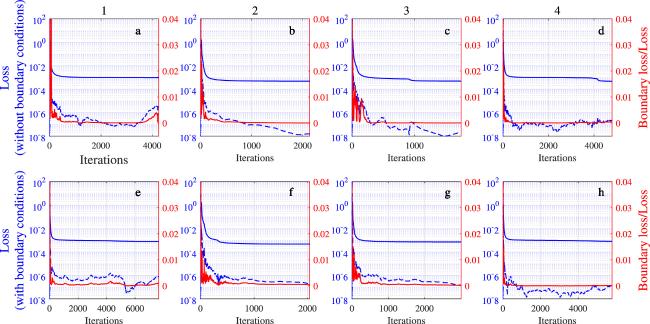

Figure 4. The loss curve: a–d are the loss curves without boundary conditions and e–h are the loss curves with boundary conditions. The blue solid line and the blue dashed line are MSE and MSEB (the left y-axis), respectively, and the red line is the ratio of MSEB to MSE (the right y-axis).

Table 2. The KdV equation: relative L2 errors with and without boundary conditions.

| | Relative L2 errors (without boundary conditions) | Relative L2 errors (with boundary conditions) |
|---|---|---|
| 1 | 1.690 036 × 10⁻³ | 9.738 059 × 10⁻⁴ |
| 2 | 8.357 186 × 10⁻⁴ | 1.799 449 × 10⁻³ |
| 3 | 1.661 154 × 10⁻³ | 1.724 861 × 10⁻³ |
| 4 | 1.835 814 × 10⁻³ | 1.678 390 × 10⁻³ |

3.1.3. The effect of network structure on solving the KdV equation

Table 3. The KdV equation: relative L2 errors for different network structures.

| Neurons / Layers | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 10 | 1.795 948 × 10⁻³ | 1.375 186 × 10⁻³ | 1.483 687 × 10⁻³ | 1.562 139 × 10⁻³ |
| 30 | 9.063 210 × 10⁻⁴ | 1.149 931 × 10⁻³ | 1.229 779 × 10⁻³ | 1.364 718 × 10⁻³ |
| 50 | 1.162 169 × 10⁻³ | 5.524 451 × 10⁻⁴ | 1.071 582 × 10⁻³ | 1.361 776 × 10⁻³ |
| 70 | 1.129 416 × 10⁻³ | 1.078 128 × 10⁻³ | 1.045 698 × 10⁻³ | 8.195 887 × 10⁻⁴ |

3.1.4. The effect of mesh size on solving the KdV equation

Table 4. The KdV equation: relative L2 errors for different mesh sizes.

| Spatial points / Time points | 50 | 100 | 150 | 200 |
|---|---|---|---|---|
| 500 | 9.534 459 × 10⁻⁴ | 5.069 412 × 10⁻⁴ | 5.014 334 × 10⁻⁴ | 5.628 230 × 10⁻⁴ |
| 1000 | 7.947 627 × 10⁻⁴ | 8.739 067 × 10⁻⁴ | 1.447 893 × 10⁻³ | 8.306 117 × 10⁻³ |
| 1500 | 1.884 557 × 10⁻³ | 2.239 517 × 10⁻³ | 1.932 972 × 10⁻³ | 3.545 602 × 10⁻³ |
| 2000 | 1.442 287 × 10⁻² | 9.473 998 × 10⁻³ | 1.581 677 × 10⁻² | 1.531 911 × 10⁻² |

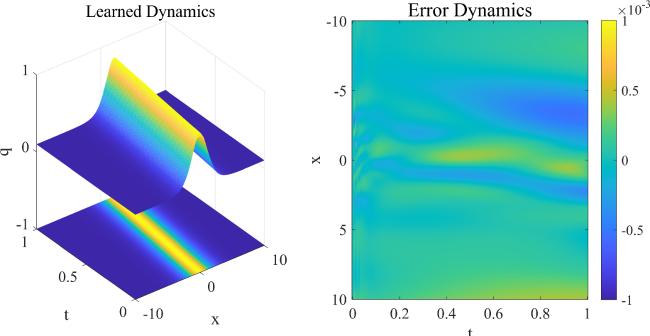

Figure 5. The traveling wave solution of the KdV equation: the dynamic behavior of the learned solution and the error density diagram.

3.1.5. Comparison of the PINNs and the PIMNs for the KdV equation

Table 5. The KdV equation: relative L2 errors for the PINNs and the PIMNs.

| | PINNs: relative L2 error | PINNs: parameters | PIMNs without boundary conditions: relative L2 error | PIMNs without boundary conditions: parameters | PIMNs with boundary conditions: relative L2 error | PIMNs with boundary conditions: parameters |
|---|---|---|---|---|---|---|
| 1 | 1.977 253 × 10⁻³ | 10 401 | 7.569 623 × 10⁻⁴ | 10 808 | 5.168 963 × 10⁻⁴ | 10 808 |
| 2 | 2.700 721 × 10⁻³ | 15 501 | 6.007 163 × 10⁻⁴ | 15 368 | 6.046 472 × 10⁻⁴ | 15 368 |
| 3 | 1.685 079 × 10⁻³ | 20 601 | 5.175 313 × 10⁻⁴ | 20 728 | 5.448 766 × 10⁻⁴ | 20 728 |
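As a sanity check on the PINN parameter counts in Table 5, the sketch below counts the weights and biases of a plain fully connected network. The architecture is an assumption: this section does not state the PINN layout, but a network with 2 inputs, 1 output, and 5, 7, or 9 hidden layers of width 50 happens to reproduce 10 401, 15 501, and 20 601. The helper name `mlp_parameter_count` is hypothetical.

```python
def mlp_parameter_count(n_in, n_out, width, hidden_layers):
    """Count weights and biases of a fully connected network.

    Assumed layout (not confirmed by the source): n_in inputs,
    `hidden_layers` hidden layers of `width` neurons each, n_out outputs,
    with one bias per neuron in every layer after the input.
    """
    sizes = [n_in] + [width] * hidden_layers + [n_out]
    # Each layer contributes (fan_in * fan_out) weights plus fan_out biases.
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))


# Usage: the three assumed PINN configurations for a scalar solution u(x, t).
kdv_counts = [mlp_parameter_count(2, 1, 50, depth) for depth in (5, 7, 9)]
```

Under the same assumption, a two-component output (real and imaginary parts) gives 10 452, 15 552, and 20 652.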

3.2. Case 2: The nonlinear Schrödinger equation

3.2.1. Comparison of different difference schemes for solving the nonlinear Schrödinger equation

Table 6. The nonlinear Schrödinger equation: relative L2 errors for different difference schemes.

| Difference schemes | Forward difference | Backward difference | Central difference |
|---|---|---|---|
| 1 | 2.604 364 × 10⁻³ | 3.259 175 × 10⁻³ | 1.330 293 × 10⁻² |
| 2 | 3.527 666 × 10⁻³ | 3.842 570 × 10⁻³ | 2.265 705 × 10⁻³ |
| 3 | 2.735 975 × 10⁻³ | 1.453 902 × 10⁻² | 2.476 216 × 10⁻³ |
| 4 | 1.529 878 × 10⁻² | 4.381 561 × 10⁻³ | 1.333 741 × 10⁻² |

3.2.2. The effect of boundary conditions on solving the nonlinear Schrödinger equation

Figure 6. The loss curve: a–d are the loss curves without boundary conditions and e–h are the loss curves with boundary conditions. The blue solid line and the blue dashed line are MSE and MSEB (the left y-axis), respectively, and the red line is the ratio of MSEB to MSE (the right y-axis).

Table 7. The nonlinear Schrödinger equation: relative L2 errors with and without boundary conditions.

| | Relative L2 errors (without boundary conditions) | Relative L2 errors (with boundary conditions) |
|---|---|---|
| 1 | 1.352 908 × 10⁻² | 1.012 920 × 10⁻¹ |
| 2 | 2.350 028 × 10⁻³ | 2.357 040 × 10⁻³ |
| 3 | 2.757 807 × 10⁻³ | 2.894 889 × 10⁻³ |
| 4 | 2.364 573 × 10⁻³ | 8.701 724 × 10⁻² |

3.2.3. The effect of network structure on solving the nonlinear Schrödinger equation

Table 8. The nonlinear Schrödinger equation: relative L2 errors for different network structures.

| Neurons / Layers | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 10 | 2.548 948 × 10⁻³ | 9.138 814 × 10⁻⁴ | 1.605 489 × 10⁻³ | 1.609 296 × 10⁻³ |
| 30 | 2.434 387 × 10⁻³ | 2.285 506 × 10⁻³ | 7.020 965 × 10⁻⁴ | 2.375 666 × 10⁻³ |
| 50 | 2.686 936 × 10⁻³ | 5.575 241 × 10⁻⁴ | 5.776 256 × 10⁻⁴ | 2.827 150 × 10⁻³ |
| 70 | 2.246 318 × 10⁻³ | 6.590 603 × 10⁻³ | 2.362 953 × 10⁻³ | 2.365 898 × 10⁻³ |

3.2.4. The effect of mesh size on solving the nonlinear Schrödinger equation

Table 9. The nonlinear Schrödinger equation: relative L2 errors for different mesh sizes.

| Spatial points / Time points | 50 | 100 | 150 | 200 |
|---|---|---|---|---|
| 500 | 1.082 217 × 10⁻³ | 1.035 790 × 10⁻³ | 1.109 075 × 10⁻³ | 1.281 616 × 10⁻³ |
| 1000 | 8.084 908 × 10⁻³ | 8.685 522 × 10⁻⁴ | 1.072 378 × 10⁻³ | 8.839 646 × 10⁻⁴ |
| 1500 | 6.154 895 × 10⁻⁴ | 8.865 785 × 10⁻⁴ | 9.732 668 × 10⁻⁴ | 6.674 587 × 10⁻⁴ |
| 2000 | 6.780 904 × 10⁻⁴ | 6.010 067 × 10⁻⁴ | 7.655 602 × 10⁻³ | 9.272 919 × 10⁻³ |

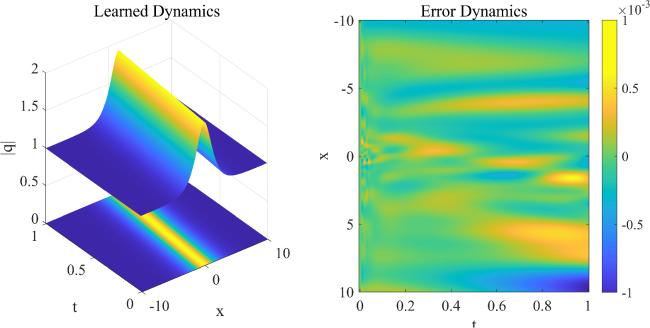

Figure 7. The traveling wave solution of the nonlinear Schrödinger equation: the dynamic behavior of the learned solution and the error density diagram.

3.2.5. Comparison of the PINNs and the PIMNs for the nonlinear Schrödinger equation

Table 10. The nonlinear Schrödinger equation: relative L2 errors for the PINNs and the PIMNs.

| | PINNs: relative L2 error | PINNs: parameters | PIMNs without boundary conditions: relative L2 error | PIMNs without boundary conditions: parameters | PIMNs with boundary conditions: relative L2 error | PIMNs with boundary conditions: parameters |
|---|---|---|---|---|---|---|
| 1 | 1.037 740 × 10⁻³ | 10 452 | 2.203 071 × 10⁻³ | 11 024 | 2.994 355 × 10⁻³ | 11 024 |
| 2 | 1.794 310 × 10⁻³ | 15 552 | 5.195 681 × 10⁻⁴ | 15 624 | 3.743 909 × 10⁻¹ | 15 624 |
| 3 | 1.440 035 × 10⁻³ | 20 652 | 2.324 897 × 10⁻³ | 21 024 | 2.287 124 × 10⁻³ | 21 024 |